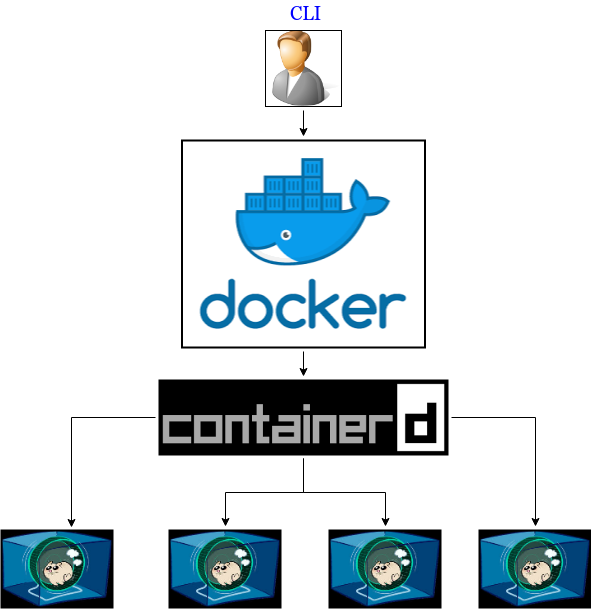

In container environments (Docker or Kubernetes) we need to deploy quickly, and the most important factor for this is the size of the container images. The larger an image is, the slower it is to download from the registry and to start, so we should reduce its size as far as possible, in a way that only minimally affects the complexity of the relationships between services.
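The effect of size on download time can be made concrete with a rough calculation. This is a minimal sketch that only models raw transfer time (size divided by link speed) and ignores compression, layer caching and registry latency; the image sizes and the 100 Mbit/s link are hypothetical values chosen for illustration, not measurements.

```python
def pull_seconds(image_mb: float, bandwidth_mbit_s: float) -> float:
    """Rough lower bound on transfer time: size in megabits / link speed."""
    if bandwidth_mbit_s <= 0:
        raise ValueError("bandwidth must be positive")
    return image_mb * 8 / bandwidth_mbit_s


# Hypothetical image sizes in MB, used only to show how time scales with size.
examples = {"large image": 900, "small image": 90}

for name, size_mb in examples.items():
    print(f"{name}: {pull_seconds(size_mb, 100):.1f} s at 100 Mbit/s")
# large image: 72.0 s at 100 Mbit/s
# small image: 7.2 s at 100 Mbit/s
```

Transfer time grows linearly with image size, and the cost is paid again on every node that has to pull the image.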

For a demonstration, we are going to use a PowerDNS-based solution. The original PowerDNS-Admin service container (https://github.com/ngoduykhanh/PowerDNS-Admin/blob/master/docker/Production/Dockerfile) has the following characteristics:

- The developer is very active, and the project includes Python code, JavaScript (Node.js) and CSS. The images on Docker Hub are out of date with respect to the code.

- The production Dockerfile does not generate a valid image.

- It is based on Debian Slim, which, although it removes a large number of files, is not sufficiently small.

There are images on Docker Hub, but few are sufficiently recent, and others do not use the original repository, so the result comes from an old version of the code. For example, the most downloaded image (really/powerdns-admin) does not include the changes of the last year and does not use yarn for the Node.js layer.

First step: Decide whether to create a new Docker image

Sometimes this is a matter of necessity or time. In this case, given the points above, the decision was to create a new image. As minimum requirements we need a GitHub account (https://www.github.com), a Docker Hub account (https://hub.docker.com/), basic knowledge of git and advanced knowledge of writing Dockerfiles.

In this case, the GitHub repository https://github.com/aescanero/docker-powerdns-admin-alpine and the Docker Hub repository https://cloud.docker.com/repository/docker/aescanero/powerdns-admin were created and linked, so that the images are built automatically when a Dockerfile is pushed to GitHub.

Second step: Choose the base of a container

Using a base of very small size and oriented to the reduction of each of the components (executables, libraries, etc.)…
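One way to compare candidate bases is to read their local sizes from the Docker CLI. The sketch below assumes Docker is installed and the images have already been pulled. It relies on `docker image inspect --format '{{.Size}}'`, which prints the image size in bytes. The helper names (`image_size_bytes`, `human`, `report`) are hypothetical, and the tags in the commented example are only illustrative (Alpine is suggested by the name of the repository above, not confirmed by the text).

```python
import subprocess


def image_size_bytes(image: str) -> int:
    """Return the size in bytes of an image that is available locally.

    Runs `docker image inspect`; the command fails (CalledProcessError)
    if the image has not been pulled.
    """
    result = subprocess.run(
        ["docker", "image", "inspect", "--format", "{{.Size}}", image],
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout.strip())


def human(n_bytes: int) -> str:
    """Format a byte count as decimal megabytes."""
    return f"{n_bytes / 1_000_000:.1f} MB"


def report(images, size_of=image_size_bytes):
    """Return (image, size) pairs sorted from smallest to largest."""
    return sorted(((img, size_of(img)) for img in images), key=lambda p: p[1])


# Example (requires Docker and both images pulled locally):
# for image, size in report(["debian:stable-slim", "alpine:latest"]):
#     print(image, human(size))
```

The `size_of` parameter lets the ranking logic be exercised without Docker, for instance by passing a function that returns known sizes.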