Following the first article (Kubernetes Dashboards 1) on general-purpose Kubernetes dashboards, this article focuses on dashboards geared to specific needs.

Specifically, we will look at three dashboards that address three needs: checking the status of a large number of pods, seeing the relationships between objects, and getting an overview of a Kubernetes-on-Docker platform.

Kubernetes Dashboards

Kube-ops-view

With 1,200 stars on GitHub, this project offers a very basic dashboard designed for large server farms, where a large volume of pods needs to be reviewed at a glance.

How to install

We create a namespace to install kube-ops-view:

kubectl create namespace kube-ops-view --dry-run=client -o yaml | kubectl apply -f -

We create a service account with administrator capabilities:

kubectl create -n kube-ops-view serviceaccount kube-ops-view-sa --dry-run=client -o yaml | kubectl apply -f -

kubectl create clusterrolebinding kube-ops-view-sa --clusterrole=cluster-admin --serviceaccount=kube-ops-view:kube-ops-view-sa --dry-run=client -o yaml | kubectl apply -f -

And we apply a Deployment together with a Service to publish it:

cat <<EOF | kubectl apply -n kube-ops-view -f -
---
apiVersion: v1
kind: Service
metadata:
  name: kube-ops-view
  namespace: kube-ops-view
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: kube-ops-view
  type: LoadBalancer
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: kube-ops-view
  name: kube-ops-view
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kube-ops-view
  template:
    metadata:
      labels:
        app: kube-ops-view
    spec:
      serviceAccountName: kube-ops-view-sa
      containers:
      - image: hjacobs/kube-ops-view:0.11
        name: kube-ops-view
        ports:
        - containerPort: 8080
EOF

Despite being a single service (importantly, without authentication), its container image is similar in size to the solutions shown before:

docker.io/hjacobs/kube-ops-view 0.11 fd70a70b6d70d 40.8MB

So its deployment should be fast.
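
Because the Service is of type LoadBalancer and the dashboard has no authentication, it can be useful to check the rollout and, where a public address is undesirable, reach the dashboard through a local port-forward instead. The following is only a minimal sketch and not part of the original installation: the function names and the `KUBECTL` and `LOCAL_PORT` variables are hypothetical, and it assumes the namespace, Deployment and Service names used in this article.

```shell
# Hypothetical helpers (not shipped with kube-ops-view). They assume the
# namespace, Deployment and Service names used in this article.
KUBECTL="${KUBECTL:-kubectl}"     # can be overridden, e.g. KUBECTL=echo to preview commands
NS="kube-ops-view"
LOCAL_PORT="${LOCAL_PORT:-8080}"  # assumed free local port

# Wait until the Deployment reports its replica as ready, or time out.
wait_for_dashboard() {
  "$KUBECTL" rollout status deployment/kube-ops-view --namespace "$NS" --timeout=120s
}

# Show the Service; the external IP appears once the LoadBalancer is provisioned.
show_dashboard_service() {
  "$KUBECTL" get service kube-ops-view --namespace "$NS" -o wide
}

# Forward a local port to Service port 80 instead of using the LoadBalancer address.
forward_dashboard() {
  "$KUBECTL" port-forward service/kube-ops-view "${LOCAL_PORT}:80" --namespace "$NS"
}
```

After sourcing the snippet, running `wait_for_dashboard && forward_dashboard` should keep the forward in the foreground and make the dashboard reachable at http://localhost:8080 until the command is interrupted.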

Features

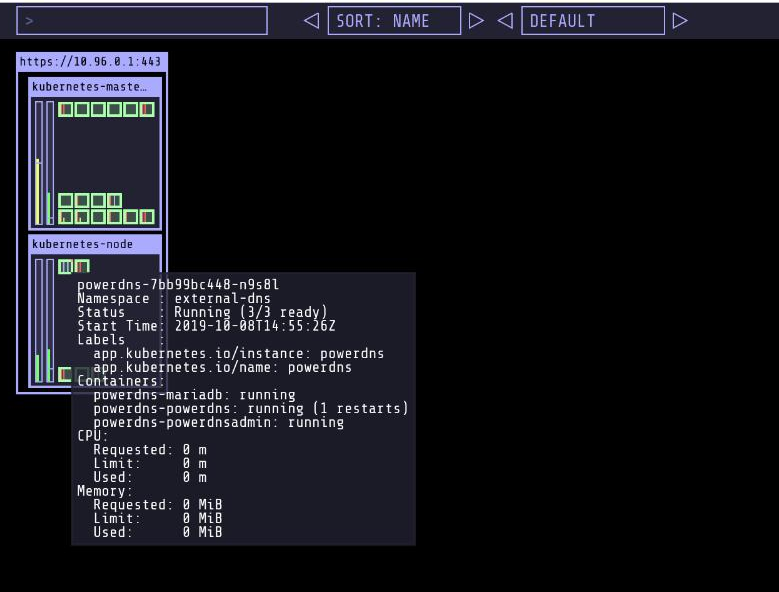

Its interface, shown in the following figure is minimal:

There are no options to drill into objects or to edit them; the information appears when hovering over the “boxes”.

Easy to use

It is clearly limited for those who are starting out with Kubernetes or who want additional information about objects, but very useful for those who want to visualize large volumes of pods.