After a first article (Kubernetes Dashboards 1) on general-purpose Kubernetes dashboards, this article focuses on dashboards geared to specific needs. Specifically, we will look at three dashboards that address three needs: checking the status of a large number of pods, seeing the relationships between objects, and getting an overview of a platform running Kubernetes on Docker.

Kubernetes Dashboards

Kube-ops-view

With 1200 stars on GitHub, this project presents a very basic dashboard designed for large server farms, where there is a large volume of pods that we need to review at a glance.

How to install

We create a namespace for kube-ops-view, then a service account with administrator capabilities, and finally run a deployment together with a service that publishes it. Although it is a single service (without authentication, which is important to note), its container is similar in size to the solutions shown before, so its deployment should be fast.

Features

Its interface is minimal: there are no options to open or edit objects, and information appears when hovering over the “boxes”.

Easy to use

Clearly limited for those who are starting out with Kubernetes or who want additional information about objects, but very useful for those who want to visualize large volumes of pods.

Kubeview

Although it has only 150 stars on GitHub, this project focuses on representing the relationships between objects in Kubernetes, which makes it very interesting for demonstrations.

How to install

Until the Helm chart is developed (https://github.com/benc-uk/kubeview/tree/master/deployments/helm), it is installed in the “default” namespace by applying two files: the first includes the kubeview user account and the corresponding permissions; the second includes the deployment together with a service of type “LoadBalancer”. The image is small and its deployment is fast.

Features

Kubeview is simple, and its graphical layout makes it very interesting for teaching Kubernetes or for presentations.
Its menu consists only of a namespace selector and a refresh interval for the view. When we select a namespace, it shows all the objects in that namespace, so we can see at a glance the relationships and details such as the IPs on which services are published. When we select an object (for example, a service), it shows descriptive information about it.

Easy to use

Very basic, with functions limited to training or demonstration. It has no ambition beyond showing the relationships between objects.

Weave-Scope

This interesting […]

Category: Series

Series of explanatory articles on a technological theme with a common objective.

Kubernetes dashboards 1

One of the first difficulties when starting to work with Kubernetes is the lack of easy-to-understand tools to manage it. Kubernetes dashboards (platform management tools) become the entry point for those who want to learn Kubernetes. In this series of articles we will examine some of the most widely used dashboards, including some more specific ones (octant, kontena), and leave out those oriented to PaaS / CaaS (Rancher, OpenShift), because in both cases they exceed the scope of entry-level Kubernetes tools.

Kubernetes dashboards

Kubernetes Dashboard

With more than 6000 stars on GitHub, the official Kubernetes dashboard is the standard option for this type of solution. Because of its dependencies and its lack of compatibility with current versions of Kubernetes, we will focus only on the v2 versions (beta3).

How to install

Running the installation command installs it in the “kubernetes-dashboard” namespace; to obtain metrics it uses the “dashboard-metrics-scraper” service, which is installed in the same namespace. It must be accessed through an external IP (a LoadBalancer service or kubectl proxy), or by configuring an Ingress with the same SSL certificates as the console (which complicates its deployment). The solution is made up of two containers; although these are two services, they are small, so deployment should be fast.

Features

It has a clean and clear interface, token authentication, and a dashboard with all the main Kubernetes elements on one side and, in the main part of the panel, a list of the selected elements in the selected namespace.

Main panel: For convenience, it has a dark theme. Among the elements it gives access to are security roles. In addition, each selected element has two actions: edit and delete.

Role editing: The edit action gives us access to the object that defines the selected element, in yaml or json format.
No element has a dedicated editor that would let someone unfamiliar with the object's structure manage it.

Node information: When we select a node, we get a lot of information about it.

Control panel over a namespace: Selecting a namespace without selecting any element shows a status box for that namespace, with the elements that form it and the status of each of them.

Information about an item: The information is also exhaustive when we open any object, in this case a service.

Secrets manipulation: In some […]

How to publish Kubernetes with External DNS, MetalLB and Traefik.

Kubernetes with External DNS, MetalLB and Traefik will help us publish web applications (in a microservice environment or not), since the basic requirements are to resolve the host name (DNS) and the web path that leads to the application.

The big map

After the steps taken in K3s: Simplify Kubernetes and Helm v3 to deploy PowerDNS over Kubernetes, we are going to shape a more complete Kubernetes solution so that you can publish services under your own domain and route and make them accessible from outside, always using the minimum resources for this task.

MetalLB

MetalLB will allow us to emulate the power of the load balancers of the cloud providers. The requirements of this solution are Kubernetes version 1.13 or higher, that no other network load balancer is operating, and that the network controller is in the supported list at https://metallb.universe.tf/installation/network-addons/. We must bear in mind that K3s includes flannel, which is supported, and that others such as Weave require some modifications.

To install MetalLB you only need to apply the “yaml” file that deploys all the elements. To activate MetalLB we must then create a configuration (file pool.yml) that defines the address pool. Applying it with k3s kubectl apply -f pool.yml configures MetalLB so that services of type “LoadBalancer” use one of the IPs defined in the specified range (in this case 192.168.9.240/28).

MetalLB gives us a great advantage over other types of local solutions, since it does not require the use of SDN (such as Kubernetes on VMware NSX) or specific servers for publishing (such as OpenShift, which in addition to SDN needs specific machines to publish services).
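The size of the range mentioned above can be checked quickly. The following is a minimal sketch that uses only the CIDR given in the text (192.168.9.240/28) and Python's standard ipaddress module; the helper name in_pool and the sample addresses are illustrative assumptions, not part of MetalLB.

```python
import ipaddress

# CIDR taken from the text; everything else here is illustrative.
pool = ipaddress.ip_network("192.168.9.240/28")

# A /28 leaves 4 host bits, so the block holds 2**4 addresses.
print("addresses in pool:", pool.num_addresses)
print("first address:", pool[0])
print("last address:", pool[-1])


def in_pool(ip: str) -> bool:
    """Return True when the given IPv4 address falls inside the pool."""
    return ipaddress.ip_address(ip) in pool


# Hypothetical addresses, used only to exercise the helper.
print(in_pool("192.168.9.241"))
print(in_pool("192.168.9.10"))
```

Whether the first and last addresses of the block are actually handed out depends on how MetalLB is configured, so this only shows which addresses the range covers.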
Traefik

Traefik is a router service with multiple features. With K3s, Traefik is deployed automatically when the master node starts; on plain Kubernetes it is deployed through Helm, and its configuration needs a yaml file like the following:

    dashboard:
      enabled: "true"
      domain: "traefik-dashboard.DOMINIO"
      auth:
        basic:
          admin: $apr1$zjjGWKW4$W2JIcu4m26WzOzzESDF0W/
    rbac:
      enabled: "true"
    ssl:
      enabled: "true"
      mtls:
        enabled: "true"
        optional: "true"
      generateTLS: "true"
    kubernetes:
      ingressEndpoint:
        publishedService: "kube-system/traefik"
    metrics:
      prometheus:
        enabled: "true"

This configuration is very similar to the one that K3s deploys, with the addition of the dashboard, where we can check the status of the configured routes and services. There are several important points to keep in mind. To run the chart with Helm 3 […]

Helm v3 to deploy PowerDNS over Kubernetes

In the article PowerDNS on Docker or Podman, easy to run, we left its deployment on Kubernetes pending. This is because the Kubernetes service structure is much more complex than that of Docker or Podman, and therefore a completely different approach is needed.

Package with Helm v3

The first thing to keep in mind is that applications should be packaged so that deployment doesn’t require extensive knowledge of Kubernetes (making life easier for the user and the developer). The second is that many users on the same environment may want to deploy the same application, and we can’t create a package for each one: we must be able to reuse the package we have created.

In Kubernetes the packaging standard is Helm, which allows us to manage deployments easily and reuse them per user, project, and so on. A Helm package is made up of several elements.

Helm v3 is the stable version of Helm (at the time of writing, Helm is at version v3.0.2), and it has interesting capabilities such as life cycle management and not requiring services installed in Kubernetes to deploy packages (to do something similar in version 2, see How to launch a Helm Chart without install Tiller, which requires a job and a launcher pod with Tiller and is therefore more complex).

Using Helm

Once our Kubernetes cluster is ready (see Kubernetes: Create a minimal environment for demos or K3s: Simplify Kubernetes), we download the Helm binary on the master node. And that’s it: there is nothing else to install, and we can install the packages we need directly. That said, we must bear in mind that Helm v3 doesn’t automatically create namespaces, because this is considered part of infrastructure management rather than a deployment task.
PowerDNS depends on a mariadb database and can optionally be given a dashboard with PowerDNS-Admin. It calls for this type of package, which is useful if we want customers to have their own DNS server that they manage themselves. In future articles we will see that this server can also be integrated with External-DNS, which will allow us to publish in PowerDNS the changes made to the Kubernetes ingress proxies (for example an Ingress with Traefik).

Creating a package with the minimum structure produces a skeleton with the elements indicated above, such as the PowerDNS […]

PowerDNS on Docker or Podman, easy to run

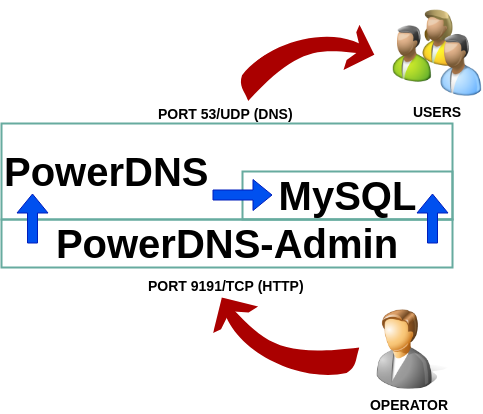

What is PowerDNS and DNS as a critical service

PowerDNS is a DNS server, an especially critical service in any infrastructure that we want to deploy, since it is the main connection between services and operators. If, among all the options we find when we look for a DNS server (a long list is available at https://en.wikipedia.org/wiki/Comparison_of_DNS_server_software), we require three conditions (easy management, simple deployment and open source), we are left with only one option: PowerDNS, with PowerDNS-Admin for its management.

PowerDNS (whose development can be followed at https://github.com/PowerDNS/pdns and which has more than 1800 stars) is a powerful DNS server whose most interesting management features are a web service with a powerful API and the ability to store information in databases such as MySQL.

We select PowerDNS-Admin for two reasons: it is actively maintained (https://github.com/ngoduykhanh/PowerDNS-Admin, more than 750 stars), and visually it is a friendlier environment, with a format similar to the one Red Hat tools currently use.

Why PowerDNS with PowerDNS-Admin?

Because together they make up a powerful package with a number of advantages. To these we must add the existence of multiple container images that greatly simplify deploying and updating this solution.

Deploy PowerDNS with Docker Compose
The solution with PowerDNS consists of three parts: the DNS server, for which we will use the pschiffe/pdns-mysql:alpine container (https://github.com/pschiffe/docker-pdns/tree/master/pdns); the mariadb database server, through the yobasystems/alpine-mariadb container (https://github.com/yobasystems/alpine-mariadb); and the aescanero/powerdns-admin container that we explained in a previous post (Docker: Reduce the size of a container). All three containers are actively maintained and small, which allows rapid deployment.

Ports 53/UDP and 9191/TCP must be available on the machine running the containers. A volume has been added to the mariadb container to give the database persistent storage, so that the configuration of the elements that make up the solution is preserved.

Once the solution is deployed, we access it at the URL http://WHERE_IS_RUNNING_DOCKER:9191, where the login screen shown in the last section appears. To eliminate the deployment made (except the database we have in […]
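The port requirement mentioned above (53/UDP and 9191/TCP) can be checked before starting the containers. This is only a sketch using Python's standard socket module: the function name port_is_free is an assumption made for illustration, and the check simply tries to bind the port on the local machine.

```python
import socket


def port_is_free(port: int, kind: int = socket.SOCK_STREAM, host: str = "0.0.0.0") -> bool:
    """Try to bind the port; a failed bind is reported as 'not free'.

    Note: binding ports below 1024 (such as 53) usually needs elevated
    privileges, so an unprivileged run also returns False for them.
    """
    with socket.socket(socket.AF_INET, kind) as probe:
        try:
            probe.bind((host, port))
        except OSError:
            return False
        return True


# Ports named in the text: DNS over UDP and the PowerDNS-Admin web UI over TCP.
print("53/UDP free:", port_is_free(53, socket.SOCK_DGRAM))
print("9191/TCP free:", port_is_free(9191, socket.SOCK_STREAM))
```

A bind test is a coarse check: it tells us that nothing on this host currently holds the port, not that a firewall will let traffic through.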

Kubernetes: adventures and misadventures of patching (kubectl patch).

Kubernetes is a powerful container orchestration tool in which many different objects run, and at some point we will want to modify them. For this, Kubernetes offers an interesting mechanism: patch. We are going to explain how to patch, and we will see that this tool is far from being as capable as would be desirable.

Patching operations in Kubernetes

According to the Kubernetes documentation and the Kubernetes API rules, three types are defined (selected with --type):

Strategic

This is the type of patch that Kubernetes uses by default. It is a native type, defined in the SIG. It follows the structure of the original object but indicates only the changes, in a yaml file (the default strategy is merge, which is why it is known as strategic merge patch).

For example, suppose we have a service defined in the file service.yml. We are going to use the command kubectl patch -f service.yml --type="strategic" -p "$(cat patch.yml)" --dry-run -o yaml, which lets us test patches on objects without the danger of modifying their content in the Kubernetes cluster.

If we want this service to listen on an additional port, we use the “merge” strategy and apply a patch (patch.yml) that contains only the new port. The patch follows the service object only as far as the point where we want to make the change (the “ports” array), and since it is a “strategic merge” change, the new entry is added to the list, as the command output shows.

If instead of “merge” we use “replace”, we eliminate all the content of the subtree where we place the label “$patch: replace” and put the content of the patch directly in its place. For example, to change the content of the array we use a “patch.yml” file in which the entire contents of “ports:” are deleted and only the object defined after the “$patch: replace” tag is left. The order is not important: the tag can go at the end and has the same effect.
Finally, “delete”, indicated by “$patch: delete”, deletes the content of the subtree; even if the patch contains new content, it is not added, so the result is an empty spec.

Merge

This type of patch is a radical change compared to “strategic” because it requires the use of JSON Merge Patch (RFC 7386); it can be applied as yaml or […]
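To see why the “merge” type behaves so differently from “strategic”, it helps to look at the algorithm that RFC 7386 describes. The following is a minimal Python sketch of that algorithm, not kubectl's own code; the function name json_merge_patch and the sample service are assumptions made for illustration.

```python
def json_merge_patch(target, patch):
    """Apply a JSON Merge Patch (RFC 7386) and return the patched value."""
    # Anything that is not an object (dict) replaces the target wholesale.
    # This includes lists: arrays are never merged element by element.
    if not isinstance(patch, dict):
        return patch
    if not isinstance(target, dict):
        target = {}
    result = dict(target)  # shallow copy so the caller's object is untouched
    for key, value in patch.items():
        if value is None:
            # A null value in the patch removes the key from the target.
            result.pop(key, None)
        else:
            result[key] = json_merge_patch(result.get(key), value)
    return result


# Hypothetical service fragment, used only to exercise the function.
service = {"spec": {"type": "ClusterIP", "ports": [{"name": "http", "port": 80}]}}
patch = {"spec": {"ports": [{"name": "https", "port": 443}], "type": None}}

print(json_merge_patch(service, patch))
```

With this patch the “ports” list is replaced by the list in the patch instead of gaining a new entry, and the “type” key is removed because its value in the patch is null. That wholesale replacement of arrays is the main practical difference from the strategic merge described earlier.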