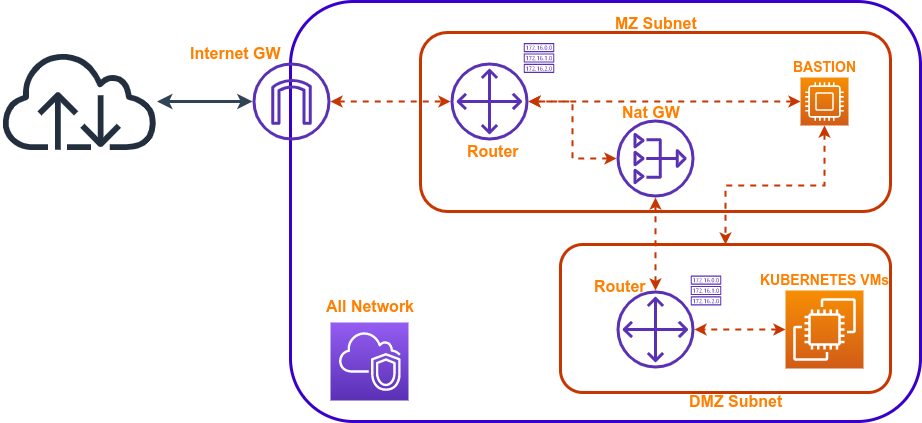

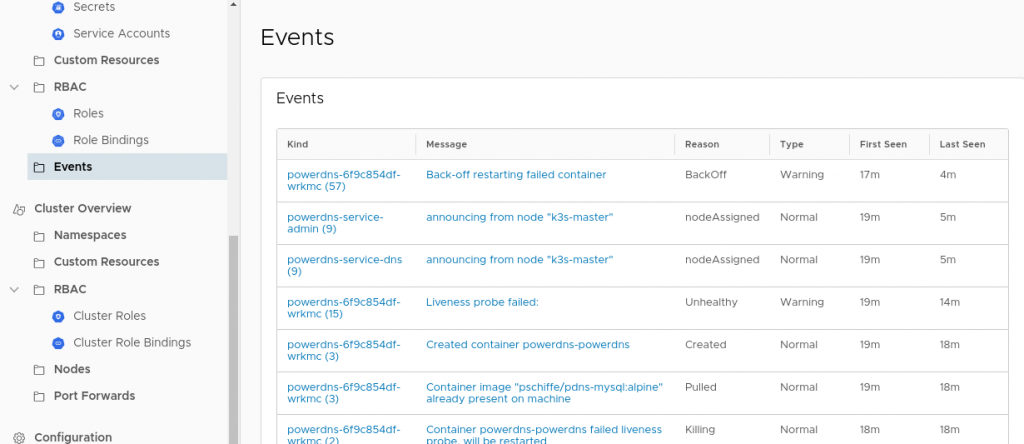

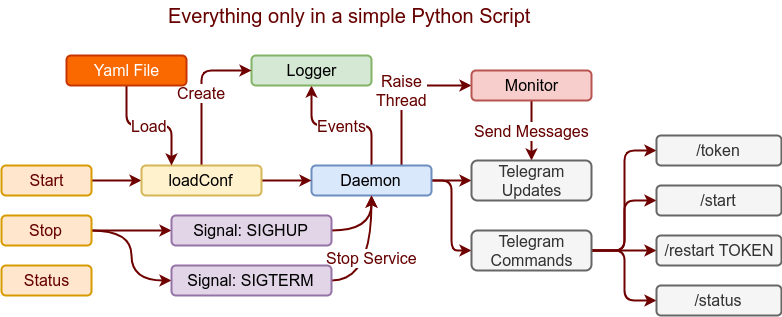

In the previous articles on how to monitor a website with Python and get notified through Telegram (Monitorizar web con Python y Telegram 1), we covered how to create the script. What is still missing is the most important part: the Telegram bot, the monitor, and the script's life cycle.

Python and Telegram: Creating the script

At this point we are going to create the script. This matters because the goal is not to deploy an application, but to have an example of how a script can carry out tasks without deploying an application, whose behavior could take even longer to verify.

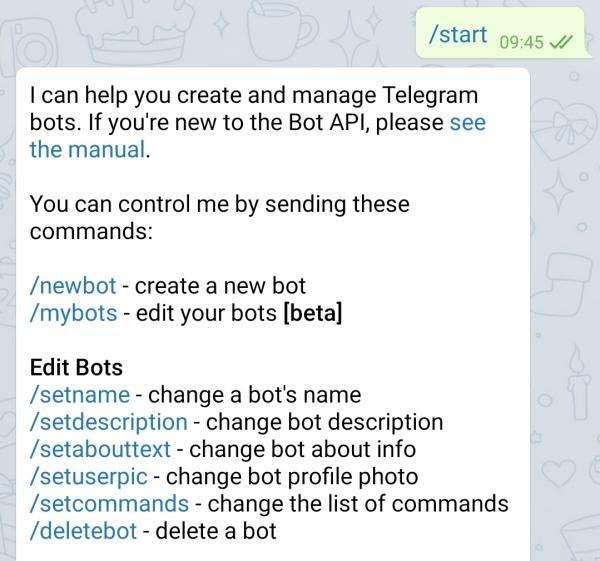

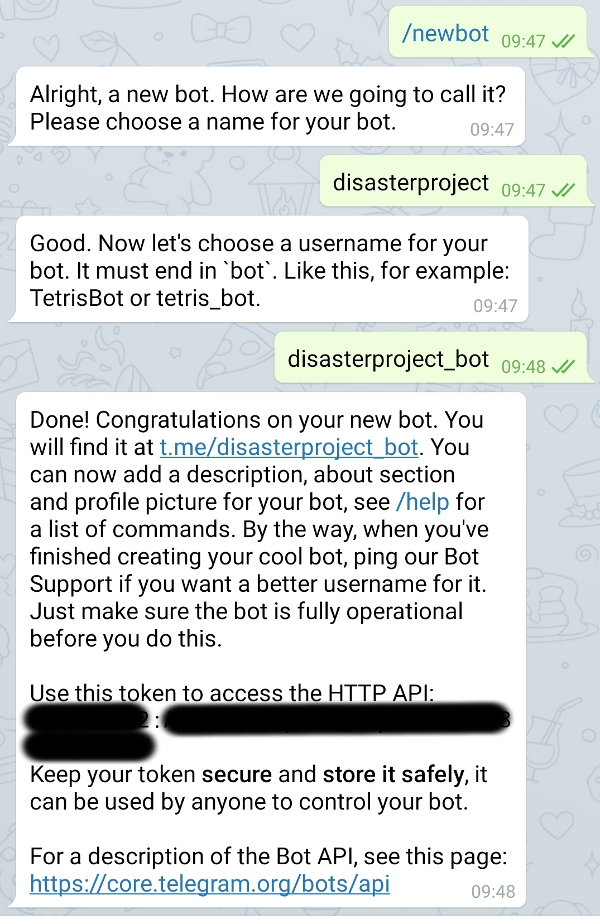

Connecting to Telegram

The right moment to connect to Telegram is when we bring up the daemon context that we saw in Monitorizar web con Python y Telegram 2. To do that, we make the following changes:

    with context:
        logger = getLogger()
        # The updater is the entry point for every call made to Telegram
        updater = Updater(token=cfg['TOKEN'], use_context=True)
        dispatcher = updater.dispatcher
        # Map each bot command to the Python function that answers it
        start_handler = CommandHandler('start', start)
        dispatcher.add_handler(start_handler)
        status_handler = CommandHandler('status', status)
        dispatcher.add_handler(status_handler)
        token_handler = CommandHandler('token', token)
        dispatcher.add_handler(token_handler)
        restart_handler = CommandHandler('restart', restart)
        dispatcher.add_handler(restart_handler)
        # Run the monitor in its own thread
        check_thread = threading.Thread(
            target=monitorProc, args=(updater, logger, ))
        check_thread.start()
        do_main_program(logger, updater)

There is one main definition here, updater: this object is used in every call made to Telegram. The second point is the use of the dispatcher to define the bot's commands and which Python function produces the response to each command. We define 4 commands for the bot:

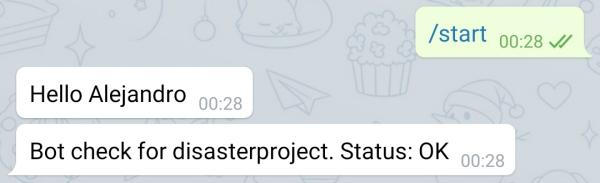
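To make the idea of mapping commands to functions more concrete, here is a minimal sketch in plain Python. It is only a toy model and not how python-telegram-bot is implemented: the `MiniDispatcher` class and the placeholder callbacks are assumptions made up for this illustration, and the real callbacks receive `update` and `context` and reply through Telegram.

```python
class MiniDispatcher:
    """Toy stand-in for a command dispatcher (not the python-telegram-bot class)."""

    def __init__(self):
        self._handlers = {}  # command name -> callback

    def add_handler(self, command, callback):
        # Remember which function answers which command
        self._handlers[command] = callback

    def dispatch(self, text):
        """Call the callback registered for '/command ...'; return None if there is none."""
        parts = text.split()
        if not parts or not parts[0].startswith('/'):
            return None  # not a command at all
        callback = self._handlers.get(parts[0][1:])
        if callback is None:
            return None  # unknown command
        return callback(parts[1:])


# Hypothetical placeholder callbacks, used only for this sketch
def start(args):
    return 'welcome'


def status(args):
    return 'status requested'


dispatcher = MiniDispatcher()
for name, callback in {'start': start, 'status': status}.items():
    dispatcher.add_handler(name, callback)

print(dispatcher.dispatch('/start'))
print(dispatcher.dispatch('/unknown'))
```

The point of the sketch is the lookup table: each command name is registered once, and an incoming message is routed to the function stored under that name, which is the role `CommandHandler` and `dispatcher.add_handler` play in the script.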

start

This function should show a welcome message when the bot is accessed for the first time or when the command is run directly. We define it in the context with:

    dispatcher = updater.dispatcher
    start_handler = CommandHandler('start', start)
    dispatcher.add_handler(start_handler)

Here we add a handler for bot commands, “CommandHandler”, whose arguments are the bot command and the function that will be called when the bot receives that command:

    def start(update, context):
        update.message.reply_text(